HEALTH_ERR 64 pgs are stuck inactive for more than 300 seconds 64 pgs stuck inactive 64 pgs stuck unclean However, in my case, I ran into a problem: > ssh node1 sudo ceph health For example: > ssh node1 sudo ceph health Then finally add the OSDs and specify the disk to use for storage: > ceph-deploy osd create node1:sdb node2:sdb node3:sdb TroubleshootingĪfter adding 3 (minimum required is 3) OSDs, their health should show HEALTH_OK when ceph health is executed in the nodes. rw- 1 ceph-admin ceph-admin 73 Aug 17 22:12 Ĭopy the config and keys to your ceph nodes: > ceph-deploy admin node1 node2 node3 rw-rw-r- 1 ceph-admin ceph-admin 125659 Aug 17 22:18 ceph-deploy-ceph.log > llĭrwxrwxr-x 2 ceph-admin ceph-admin 4096 Aug 17 22:18.

Note that this is less than keys indicated in the quick start guide the mgr and rbd keyrings are not present.

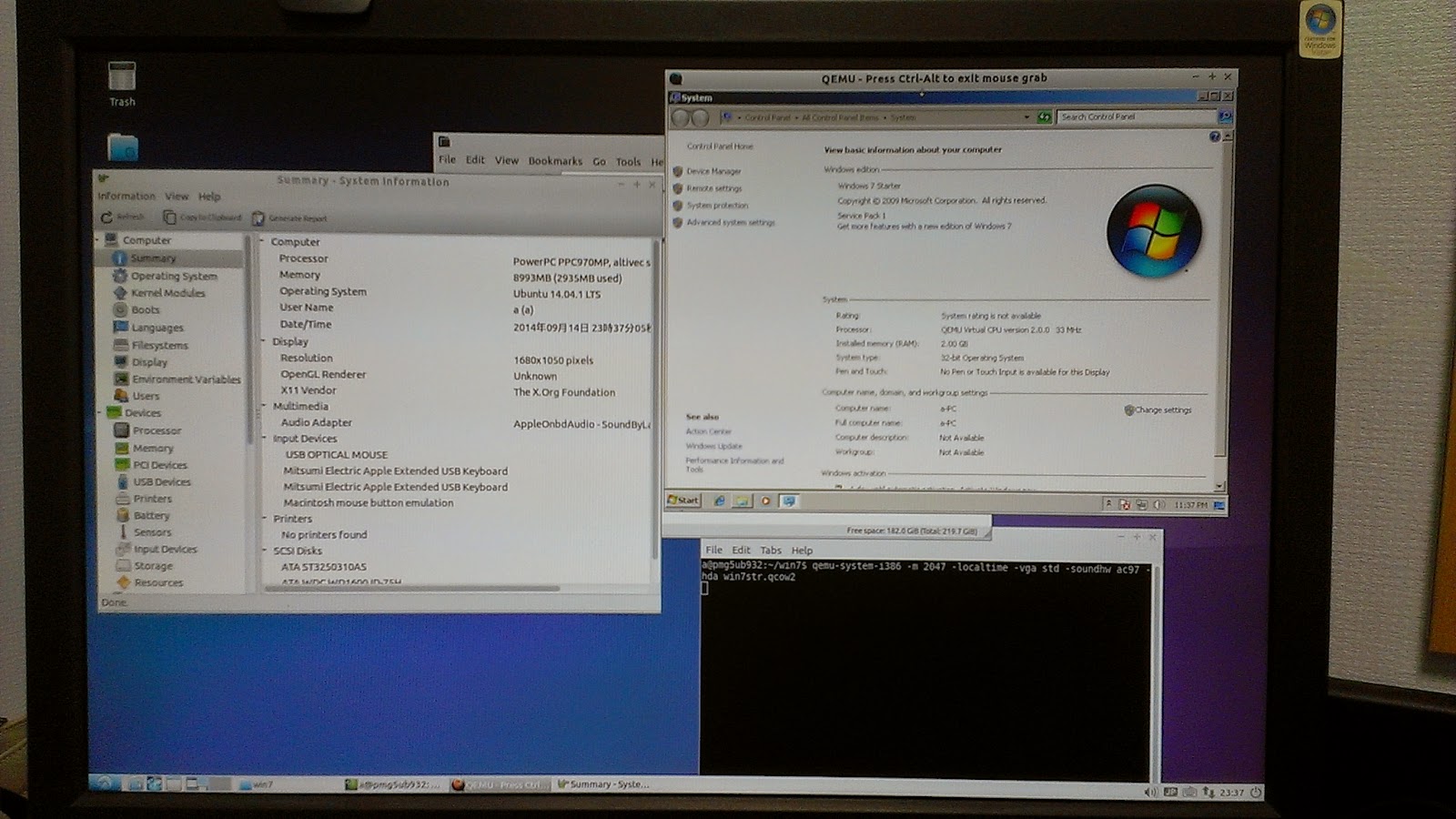

Ubuntu 14.04.2 lts qcow2 install#

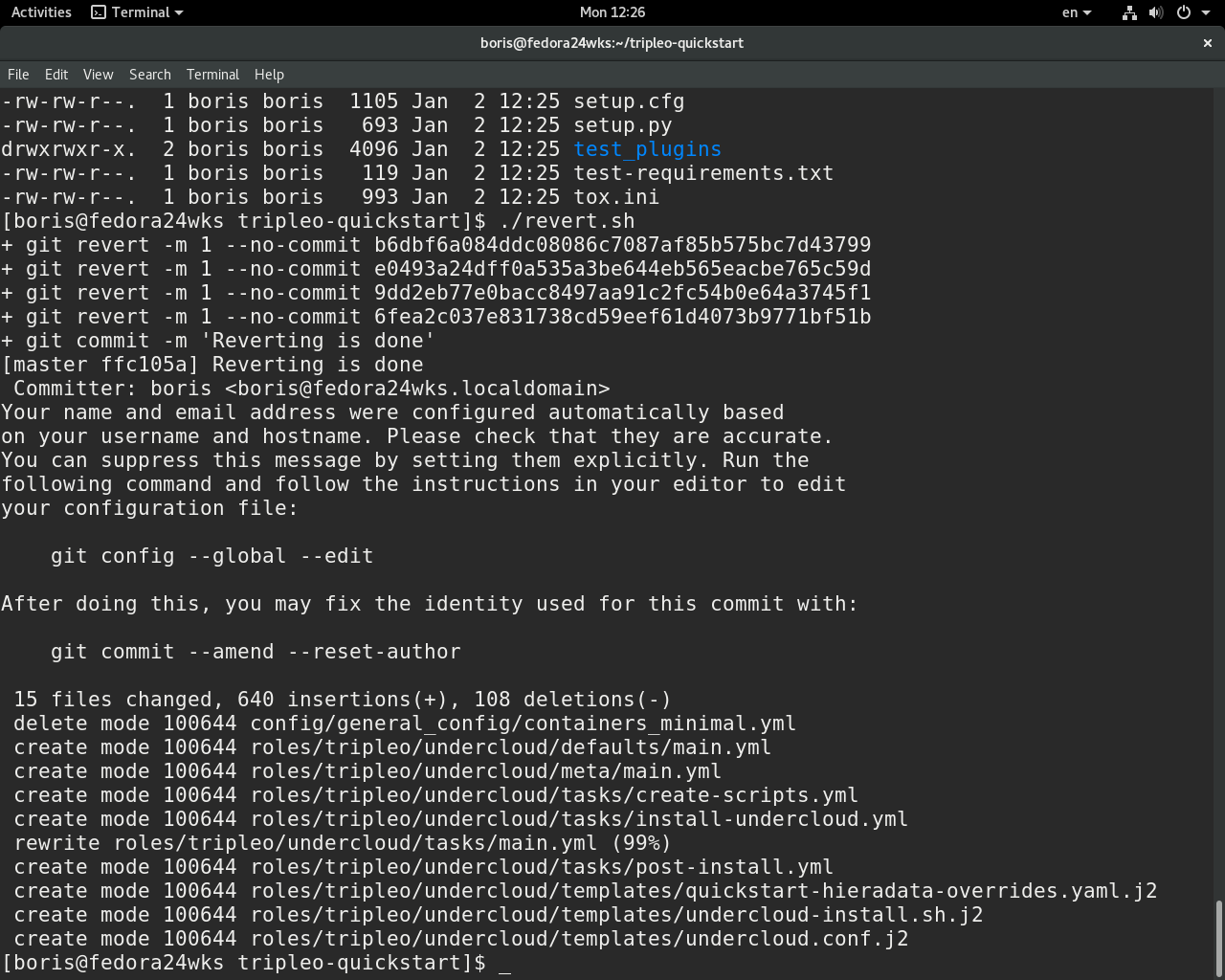

Next, install Ceph packages in the nodes: > ceph-deploy install node1 node2 node3ĭeploy the initial Monitor and gather they keys: > ceph-deploy mon create-initialĪt this point, the directory will have the following keys. A number of files will be created as a result of this command: > lsĬeph.conf ceph-deploy-ceph.log Recall from Part 1 that the first nodes was named node1. > mkdir my-clusterĬreate a new cluster by specifying the name of the node which will run the first monitor: > ceph-deploy new node1

SSH into the admin node as ceph-admin and create a directory from which to execute ceph-deploy. We now move on to setting up Ceph with 1 Monitor and 3 OSDs according to the quick start guide here. In Part 1, the infrastructure required for the initial Ceph deployment was set up on GCE. This procedure was last used in March 2019 April 2020 June 2020 Sept 2020. At this point, the TXT entry should be deleted from the DNS. It validated the new TXT entry and gave me the new certs. 300 or 5m) so that you don’t have to wait a long time for a new value if somehow you screw this process up and have to change the value.Īfter adding the entry, wait a few minutes and press enter to continue with certbot. At this point, I go to my Google Domains web console and added a TXT entry with the name _acme-challenge (or whatever name certbot gives you). Sudo certbot certonly -manual -preferred-challenges=dns -email -agree-tos -d *. -server Ĭertbot will generate a value to be added to a DNS TXT record and says “Press Enter to Continue”. The follow is the command used to generate a wildcard cert for *.: My domain is registered with Google Domains.

Here is what I did to generate Domain Verified wildcard SSL certificates from Ubuntu 18.04 LTS – no ports needs to be opened.
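The ceph-deploy steps from this walkthrough, plus the HEALTH_OK check from the troubleshooting section, can be collected into a small Bash helper meant to be sourced from inside the my-cluster directory. This is a minimal sketch. It assumes the host names node1, node2 and node3, node1 as the monitor, the data disk sdb, and the older host:disk form of ceph-deploy osd create used above (newer ceph-deploy releases changed that syntax). The names run_step, deploy_cluster and wait_for_health_ok, and the DRY_RUN switch, are hypothetical. They are not part of ceph-deploy.

```shell
#!/usr/bin/env bash
# Sketch of the quick-start steps from this walkthrough (source this file).
# Assumptions: hosts node1..node3, monitor on node1, data disk sdb, and the
# host:disk syntax of "ceph-deploy osd create" used above.
# run_step, deploy_cluster, wait_for_health_ok and DRY_RUN are hypothetical
# helpers, not ceph-deploy features.

NODES=(node1 node2 node3)
MON_NODE=node1
DISK=sdb
: "${DRY_RUN:=1}"   # default: only print commands; set DRY_RUN=0 to execute

# Print the command in dry-run mode, otherwise execute it.
run_step() {
  if [[ "$DRY_RUN" != 0 ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run the ceph-deploy sequence, stopping at the first failing step.
deploy_cluster() {
  local targets=()
  local n
  for n in "${NODES[@]}"; do
    targets+=("$n:$DISK")                        # e.g. node1:sdb
  done
  run_step ceph-deploy new "$MON_NODE"           || return 1
  run_step ceph-deploy install "${NODES[@]}"     || return 1
  run_step ceph-deploy mon create-initial        || return 1
  run_step ceph-deploy admin "${NODES[@]}"       || return 1
  run_step ceph-deploy osd create "${targets[@]}"
}

# Run a health command up to $1 times, $2 seconds apart, until its output
# starts with HEALTH_OK. The command itself is passed as the remaining args.
wait_for_health_ok() {
  local tries="$1" interval="$2"
  shift 2
  local i out=""
  for (( i = 1; i <= tries; i++ )); do
    out="$("$@" 2>/dev/null)"
    if [[ "$out" == HEALTH_OK* ]]; then
      echo "HEALTH_OK after $i check(s)"
      return 0
    fi
    if (( i < tries )); then
      sleep "$interval"
    fi
  done
  echo "not healthy after $tries check(s): ${out:-no output}" >&2
  return 1
}
```

For example, DRY_RUN=1 deploy_cluster only prints the five ceph-deploy commands for review. DRY_RUN=0 deploy_cluster && wait_for_health_ok 60 10 ssh node1 sudo ceph health runs them and then polls node1 every 10 seconds, up to 60 times. With a HEALTH_ERR like the one above, the helper gives up and returns non-zero instead of hiding the problem.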
